Modern teams generate more data than ever, yet many still rely on scattered spreadsheets, inconsistent structures, and tools that can’t scale beyond early prototypes. As organizations move toward automation, AI-driven insights, and multi-step workflows, the need for clear data foundations becomes critical. This is where strong data modeling, smooth migration, and scalable systems converge — forming the backbone of high-quality digital operations.

The goal of this guide is to simplify the topic so it’s accessible to both technical and non-technical audiences. Whether you’re designing your first relational structure, migrating thousands of rows from spreadsheets, or building something that needs to scale to millions, this article will help clarify how to think about data modeling while also showing where modern no-code platforms like Baserow fit in naturally.

Why Data Modeling Matters for Modern Digital Teams

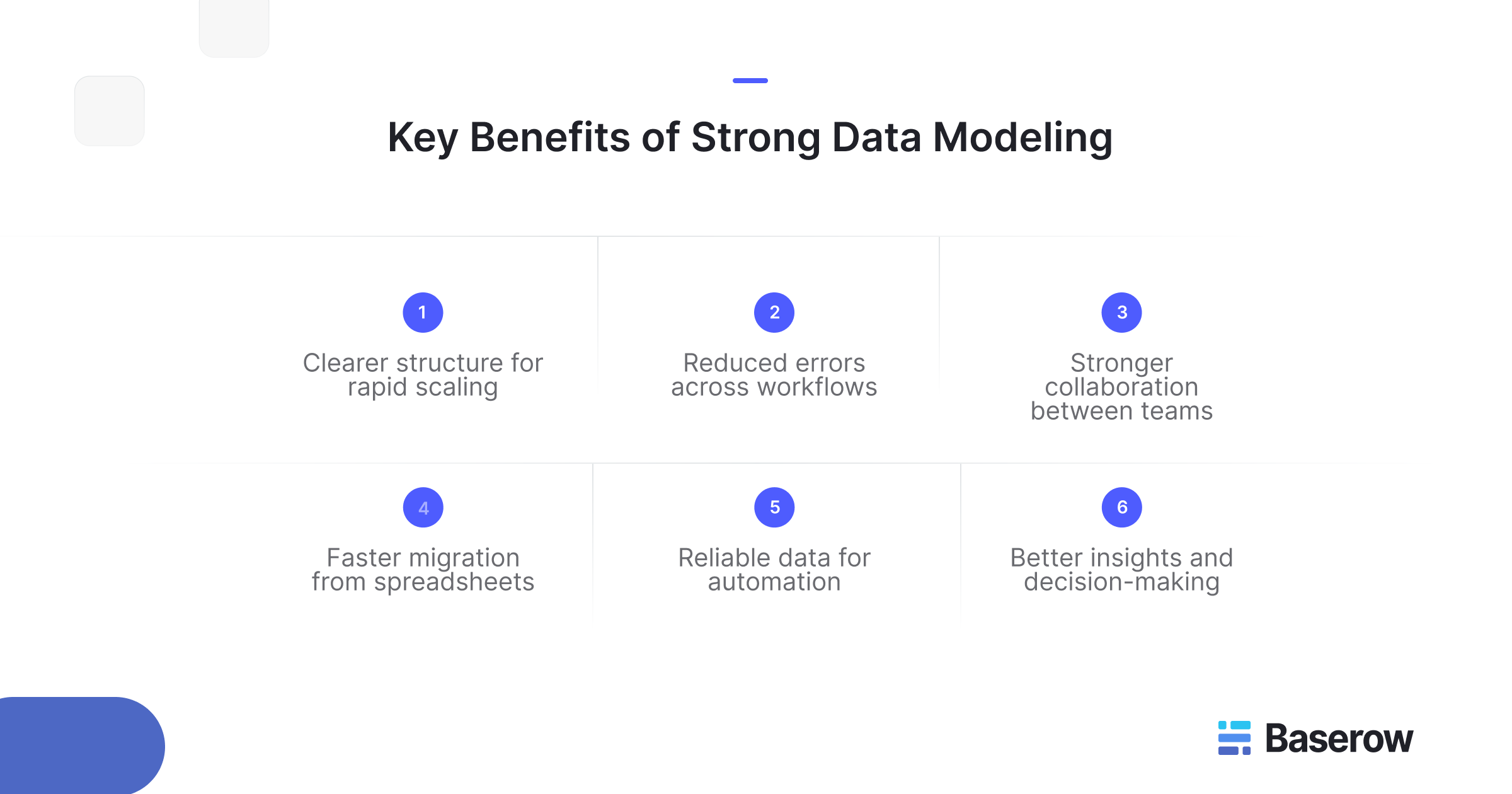

Data modeling defines how information should be structured so it can be stored, queried, and used reliably across systems. At its core, it provides a shared language between business stakeholders and technical teams — ensuring everyone understands the relationships between data sets, the rules governing them, and how they support workflows.

For many organizations, the pressure comes from new product requirements, regulatory expectations, or analytics needs that outgrow the limitations of traditional files. A clear conceptual model helps remove ambiguity. Instead of guessing which column means what, teams gain structured clarity, enabling seamless collaboration across product managers, business analysts, designers, and engineers.

A well-structured system also supports the long-term health of a database management system. Whether the final application uses APIs, interfaces, automations, or AI workflows, the underlying structure only works if the data foundation is clean. This is why companies increasingly prioritize unified modeling strategies instead of ad-hoc spreadsheet experimentation.

Baserow, which offers a spreadsheet-style interface on top of a relational backend, helps bridge the gap for teams who need structure without writing SQL. Its approach makes it easier to shift from fragmented data to a more robust format. The platform overview explains how teams align around shared data modeling practices without technical friction.

The Core Challenges Teams Face With Data Modeling & Migration

Migrating Away From Spreadsheets at Scale

Most teams begin in spreadsheets — flexible, practical, and familiar. But as soon as multiple teams collaborate, relationships matter, or data sets grow above hundreds of thousands of records, friction begins. Files become slow, formulas break, and errors spread quickly.

Migrating at scale requires tools that support fast, fault-tolerant importing. Many platforms throttle performance or fail midway through large loads. Resources such as the guide on replacing spreadsheets with a scalable tool outline how to transition safely from spreadsheets into relational structures while preserving context and ensuring long-term scalability.

Designing Relational Schemas Without SQL

Another major challenge is that many platforms require technical knowledge just to define table relationships. If business teams cannot create logical structures themselves, data quality suffers.

Guides like the How to Model Your Baserow Data article simplify the fundamentals so teams can model tables visually. This is valuable for teams who need the sophistication of relational databases but prefer modeling tools that are intuitive and collaborative.

Community examples on the Baserow Community also show real-world modeling scenarios from early-stage startups to enterprise data teams — highlighting patterns, mistakes, and best practices.

Scaling Systems Once Prototypes Grow

Most no-code tools enable fast prototyping, but very few maintain excellent performance as data loads increase. When teams need to support millions of rows, API integrations, or multi-step forms spanning multiple tables, performance bottlenecks appear.

Platforms that combine visual modeling with efficient storage engines provide a smoother path from prototype to production. Baserow’s API-first architecture, described in the guide on API-first no-code tools, helps ensure scalability without forcing teams to migrate again later when constraints tighten.

Understanding the Types of Data Models

Every data project — whether small or enterprise-scale — begins by understanding the types of data models available. Each exists to answer a different kind of question.

- Conceptual Data Models

A conceptual model focuses on high-level definitions and how data elements fit together. It is used by business teams to clarify meaning, scope, and purpose before technical details are added.

- Logical Data Models

Logical structures go a layer deeper. They define relationships, constraints, and attributes while remaining independent of any specific technology. This layer ensures teams know what the data should represent before thinking about how it will be stored.

- Physical Data Models

Physical data models describe how data is actually stored inside the database engine. They include indexing, storage format, and optimization details required by the physical model that will run in production.

Together, these reflect the full stack of conceptual logical and physical modeling. In some cases, teams also incorporate object oriented patterns for more complex applications.

Each layer plays a role in long-term reliability, especially when teams integrate analytics, automation, or business intelligence workflows.

The Data Modeling Process Explained

Every successful data project begins with a clear structure. This is where the data modeling process becomes essential, helping teams define how information should flow between tables, applications, and stakeholders. While the terminology can feel intimidating, the actual process of creating reliable models follows a simple, repeatable structure.

At its core, modeling is the process of translating business requirements into structured data entities. This step typically involves product owners, domain experts, and business analysts who collaborate to outline what the system needs to support. The goal is not to think about columns or indexing yet, but to understand the real-world objects the data represents.

Once the high-level structure is validated, the next step is mapping relationships. Teams define which elements connect, how they interact, and whether constraints or rules apply. This ensures that when the system is implemented, inconsistencies are avoided and relationships remain intact.

For many organizations working in no-code environments, visual modeling tools make this phase more intuitive. Guides such as How to Model Your Baserow Data explain best practices for structuring tables, linking fields, and organizing workflows within relational databases. These resources are especially valuable for teams transitioning from spreadsheets into structured relational formats.

The final stage is validating the model before deploying it into a production-ready system. This includes testing relationships, confirming field definitions, and aligning the structure with business stakeholders to ensure accuracy. For teams using Baserow, examples shared within the Baserow Community offer real-world insights into how others approach schema validation and refinement.

Choosing Tools for Reliable Migration & Scale

Once a data model is established, the next challenge is selecting tools that can support reliable migration and long-term scalability. Teams often underestimate how quickly datasets grow, particularly when automating workflows, connecting multiple systems, or implementing multi-step forms that capture structured data across several relational tables.

High-quality tools should support both database design and practical execution. This means enabling users to design relational structures visually while maintaining the performance and robustness of traditional database engines. Platforms like Baserow bridge this gap by combining a spreadsheet-style interface with a backend optimized for relational scalability — making it easier for non-technical users to manage structured systems without learning SQL.

Strong data management capabilities are also essential. Tools should provide predictable performance, safe importing, and the ability to handle millions of rows without degradation. Teams migrating from spreadsheets at scale can learn practical strategies from resources like How to Replace Spreadsheets With a Scalable Tool, which outlines common pitfalls and best practices during large migrations.

Scalability matters on the technical side as well. Many teams building automated workflows, AI-driven applications, or internal systems rely on fast, stable API performance. Baserow’s architecture, described in API-First No-Code Tools, supports this approach by offering predictable, scalable endpoints that integrate easily with operational systems.

How Baserow Supports High-Scale Modeling & Structured Migration

Although this guide is not a product comparison, it’s valuable to understand how modern platforms align with the challenges outlined. Baserow is one example of a system designed to support teams through modeling, migration, and scale without requiring extensive technical expertise.

- Relational Modeling With a Spreadsheet-Like UI

Teams can design relational structures visually, using linked tables and fields to represent real-world relationships. This makes it easier for business teams and technical users to collaborate on schema creation. Resources like the How to Model Your Baserow Data – Community Guidelines provide patterns and examples from real users.

- Scalable Imports for Large Data Sets

Users have successfully imported hundreds of thousands — and in some cases millions — of rows during migrations. Because Baserow supports efficient batch importing, teams transitioning from legacy spreadsheets or fragmented systems experience fewer bottlenecks.

One community example involved a financial operations team migrating 800,000+ transactional records from multiple Excel sources into a unified relational backend. After cleaning their data and designing a relational structure in Baserow, their workflows improved dramatically, and downstream automation became far easier to manage.

- API-First Architecture for Complex Forms & Workflows

For teams building multi-step forms, process automations, or business intelligence tooling, Baserow’s API-first design offers predictable performance and ensures systems can scale from early prototypes to production-ready applications. Because API endpoints remain consistent, teams can iterate on schemas without breaking external integrations.

This flexibility allows teams across product, operations, analytics, or engineering to evolve their backend as requirements grow without undergoing another migration.

%20(1).webp)

Use Case: Migrating From Spreadsheets to a Scalable Relational Backend

Consider a mid-sized operations team managing logistics data across multiple regions. Their system started in spreadsheets — dozens of files, inconsistent naming, manual copying, and error-prone reconciliation. As operations expanded, limitations became obvious: slow files, broken formulas, and no reliable way to track relationships between shipments, routes, and suppliers.

The team’s goal was to migrate into a unified platform that supported relational structures, multi-step forms, API-driven automations, and future analytics initiatives. After mapping their requirements, they used the Baserow data modeling guide to break their workflow into structured tables such as Shipments, Route Data, Supplier Profiles, and Delivery Metrics.

By testing the structure as a conceptual model and then refining it into a fully relational format, the team validated relationships before importing historical data. They used Baserow’s fast import pipeline to upload over one million rows without fragmentation — a task impossible to maintain in spreadsheets. Once complete, they connected their system to custom dashboards, automations, and AI forecasting tools through Baserow’s stable API layer, outlined in the API-first no-code guide.

This migration unlocked cleaner processes, more reliable reporting, and the ability to scale without repeated data restructuring — a clear example of how strong modeling principles improve operational resilience.

Frequently Asked Questions

- What are the 7 R’s of data migration?

The 7 R’s include: Rehost, Replatform, Repurchase, Refactor, Retire, Retain, and Rebuild. These help teams evaluate the best migration strategy depending on infrastructure, cost, and long-term needs. Each option supports different levels of modernization, from simple lift-and-shift moves to full architectural redesigns.

- How do you answer data modeling questions?

Focus on clarity, purpose, and structure. Explain how the model addresses business requirements, how entities relate, and why constraints or relationships were chosen. Referencing a high-level modeling framework helps establish a clear, structured rationale.

- What are the three main database migration strategies?

Teams typically choose between:

- Big Bang Migration (all at once),

- Trickle Migration (incremental), and

- Hybrid Migration (a controlled combination).

- The right choice depends on downtime tolerance, data volume, and system complexity.

- What are the 4 types of data modeling?

Common categories include conceptual models, logical models, physical models, and object-oriented structures. Each plays a role in defining meaning, organizing relationships, and determining the technical structure of a system.

- What are the three basic data modeling techniques?

The foundational techniques involve entity-relationship modeling, hierarchical modeling, and relational modeling. These help teams describe, link, and structure data in ways that support long-term reliability.

Conclusion

Strong data modeling, clear migration strategies, and scalable structures form the backbone of modern digital operations. Whether your team is building multi-step forms, prototyping new applications, or expanding into large-scale systems, structured, relational data ensures long-term reliability and reduces downstream complexity. Tools that combine visual modeling with a robust backend make this journey more accessible, especially when they help teams move smoothly from spreadsheets into scalable systems.

Platforms like Baserow align naturally with these needs by offering flexible modeling, fast imports, API-first design, and a spreadsheet-style interface that supports both business and technical teams. You can explore Baserow further in the product overview or learn modeling techniques from community discussions at the Baserow Community.

If you’re ready to build structured systems that can grow with your team, you can get started here:

Baserow 2.1 is a maintenance-focused release that improves performance, security, and reliability. It introduces Expert formula mode, Nuxt 3 and Django upgrades, bug fixes, PostgreSQL 14+ support for self-hosters, and a new Ukrainian translation.

Discover how Airtable and Baserow compare in features, flexibility, speed, and scalability. Compare pricing plans and hidden costs to make an informed decision!

Explore the best open-source software alternatives to proprietary products. Discover OSS tools, licenses, and use cases with our updated directory.